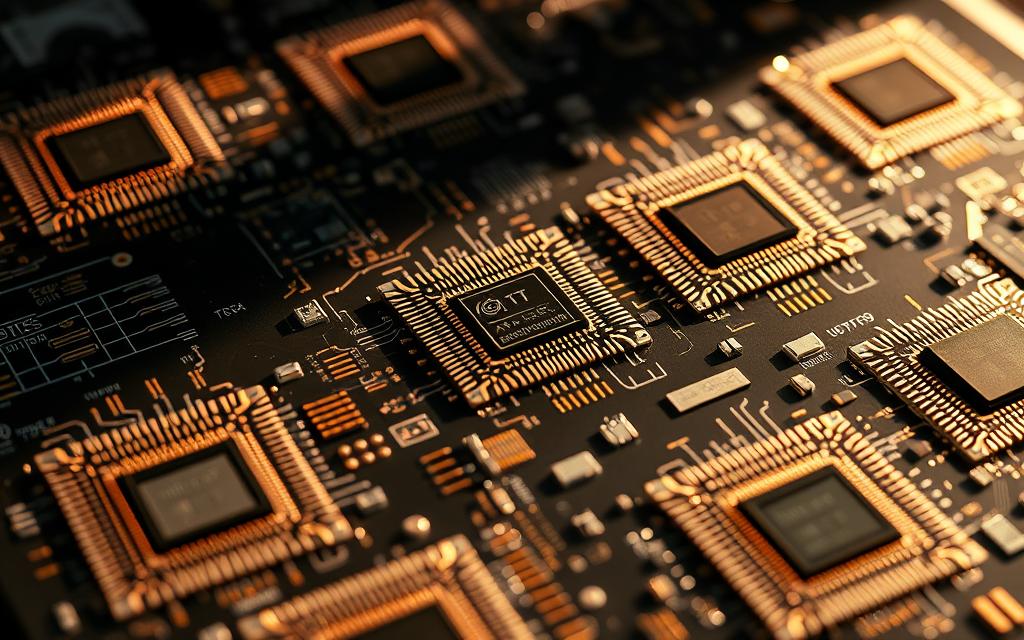

The AI industry is undergoing a transformative shift with the rise of specialized hardware designed for transformer models. These advanced architectures dominate modern AI systems, driving the need for optimized solutions.

Etched’s Sohu ASIC is a prominent example. Etched claims throughput of more than 500,000 tokens per second on Llama 70B, roughly a 10x improvement over traditional GPUs on transformer workloads. By the company’s figures, a single 8xSohu server can replace 160 H100 GPUs while sustaining 90% FLOPS utilization.
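To make these headline numbers easier to compare, the short sketch below works out what they imply per chip. It assumes the 500,000 tokens per second figure refers to a full 8xSohu server, which the text does not state explicitly; the variable names are illustrative.

```python
# Figures quoted in the text; the per-server interpretation is an assumption.
SERVER_TOKENS_PER_SEC = 500_000   # assumed throughput of one 8xSohu server
SOHU_PER_SERVER = 8
H100_REPLACED = 160

# How many H100 GPUs each Sohu chip would stand in for.
h100_per_sohu = H100_REPLACED / SOHU_PER_SERVER

# Throughput per Sohu chip, and the per-H100 throughput the claim implies.
tokens_per_sohu = SERVER_TOKENS_PER_SEC / SOHU_PER_SERVER
implied_tokens_per_h100 = SERVER_TOKENS_PER_SEC / H100_REPLACED

print(f"H100s replaced per Sohu chip: {h100_per_sohu:.0f}")
print(f"Tokens/sec per Sohu chip:     {tokens_per_sohu:,.0f}")
print(f"Implied tokens/sec per H100:  {implied_tokens_per_h100:,.0f}")
```

Under that assumption, the claim amounts to each Sohu chip doing the work of 20 H100 GPUs on this workload.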

The market is shifting from flexible GPUs to algorithm-specific ASICs. This move promises significant energy cost savings and better performance. Etched’s $120M funding round highlights the growing importance of transformer-focused silicon.

As AI models standardize around transformer architecture, the demand for specialized chips will only increase. This trend underscores the strategic importance of developing hardware tailored to these models.

Introduction to AI Chip Transformers

The rise of transformer models has reshaped the hardware landscape. These architectures place unusual demands on compute and memory, and they reward hardware built around them. General-purpose GPUs, long the backbone of AI computing, are increasingly being challenged by accelerators designed specifically for transformer workloads.

Transformer-based systems demand immense processing power. For example, a single 2048-token batch on a 70B-parameter model requires about 304 TFLOP of compute. Memory is equally critical: the weights alone occupy 140 GB (70B parameters at 2 bytes each), and all of them must be read to process a batch. These technical challenges have driven the development of tailored hardware.
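The arithmetic behind these figures can be sketched in a few lines. The sketch assumes 16-bit weights (2 bytes per parameter) and the common rule of thumb of 2 floating point operations per parameter per token. The 304 figure is reproduced if the batch also generates 127 further tokens after the 2048-token input; that detail is an assumption here, since the text mentions only the 2048 tokens.

```python
PARAMS = 70e9              # Llama 70B parameter count
BYTES_PER_PARAM = 2        # assumption: 16-bit weights
FLOP_PER_PARAM_TOKEN = 2   # rule of thumb: one multiply and one add

def weight_memory_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the weights, in gigabytes."""
    return params * bytes_per_param / 1e9

def forward_tflop(params: float, tokens: int) -> float:
    """Approximate forward-pass work for `tokens` tokens, in TFLOP."""
    return FLOP_PER_PARAM_TOKEN * params * tokens / 1e12

print(weight_memory_gb(PARAMS, BYTES_PER_PARAM))   # weights alone
print(forward_tflop(PARAMS, 2048))                 # 2048 input tokens only
print(forward_tflop(PARAMS, 2048 + 127))           # assumed: plus 127 decoded tokens
```

Note that TFLOP here is a quantity of work, not a rate. Dividing it by a chip's sustained TFLOP per second gives a lower bound on the time per batch.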

Continuous batching keeps compute units busy by admitting new requests into a running batch as soon as earlier ones finish, rather than waiting for the whole batch to complete. TSMC’s 4nm process enables high-density designs, further enhancing performance. These advancements highlight the shift from flexible GPUs to algorithm-specific accelerators.
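A toy scheduler shows why continuous batching helps utilization. This is a minimal sketch, not any vendor's implementation: the `Request` class, the function names, and the request lengths are all hypothetical, and each "step" stands for one decode iteration in which every running request emits one token.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Request:
    name: str
    tokens_left: int  # decode steps still needed; assumed to be >= 1

def continuous_batching(requests, max_batch):
    """Count decode steps when free slots are refilled after every step."""
    waiting = deque(requests)
    running = []
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free batch slots.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step: each running request produces one token.
        steps += 1
        for req in running:
            req.tokens_left -= 1
        # Finished requests leave immediately, freeing their slots.
        running = [r for r in running if r.tokens_left > 0]
    return steps

def static_batching(lengths, max_batch):
    """Baseline: each fixed batch runs until its longest request finishes."""
    return sum(max(lengths[i:i + max_batch])
               for i in range(0, len(lengths), max_batch))

lengths = [2, 8, 3, 4]
reqs = [Request(f"r{i}", n) for i, n in enumerate(lengths)]
print("static steps:    ", static_batching(lengths, max_batch=2))
print("continuous steps:", continuous_batching(reqs, max_batch=2))
```

With these made-up lengths, the static baseline leaves a slot idle while the 8-token request finishes, whereas the continuous scheduler reuses that slot for the next request.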

Energy consumption is another key factor. Transformer operations often require significant power, making efficiency a priority. Architectural specialization simplifies software stacks, reducing overhead and improving performance.

Take the Llama 3 70B model as an example. Its benchmarks demonstrate the capabilities of transformer-specific hardware. This case study underscores the importance of tailored solutions in modern computing.

| Feature | GPU | ASIC |
|---|---|---|
| Control Logic | Complex | Simplified |
| Energy Efficiency | Moderate | High |
| Processing Speed | Fast | Faster |

Over the past five years, the evolution of hardware has been remarkable. From general-purpose designs to specialized accelerators, the focus has shifted to meeting the demands of transformer models. This trend is set to continue, shaping the future of computing.

Which Companies Are Making Transformer Chips for AI Labs?

The race to dominate transformer-specific hardware is heating up. Companies and startups are competing to deliver the most efficient and powerful solutions. This shift is driven by the growing demand for high-performance chips tailored to transformer models.

Etched: Pioneering Transformer-Specific Chips

Etched has emerged as a leader in this space. Their Sohu ASIC is designed specifically for transformer workloads, offering unmatched speed and efficiency. With a focus on reducing energy costs, Etched’s hardware is setting new standards in the market.

Their innovative approach has attracted significant investment, highlighting the potential of transformer-focused silicon. Etched’s first-mover advantage positions them as a key player in this competitive landscape.

Other Key Players in the Market

NVIDIA continues to dominate the GPU market, holding an estimated 95% share of GPUs used for machine learning. Its Blackwell architecture is a strong counter to emerging ASICs. Meanwhile, AMD’s MI300 series is challenging NVIDIA’s H100 in inference benchmarks.

Groq is another notable competitor, achieving impressive token throughput with their LPUs. Cerebras is pushing boundaries with their WSE-3 chip, which contains 4 trillion transistors. These advancements highlight the diversity of solutions in the market.

| Company | Key Innovation | Notable Figure or Claim |
|---|---|---|
| Etched | Sohu ASIC | 500,000 tokens/sec (Llama 70B, claimed) |
| NVIDIA | Blackwell Architecture | 95% ML GPU share |
| AMD | MI300 Series | Competes with H100 |
| Groq | LPUs | High token throughput on 70B models |
| Cerebras | WSE-3 | 4 trillion transistors |

The market for transformer-specific hardware is evolving rapidly. With each company bringing unique strengths, the competition is driving innovation and performance to new heights.

Technological Breakthroughs in AI Chip Architecture

Recent advancements in hardware design are redefining the capabilities of modern computing. These innovations address the unique demands of advanced architectures, enabling significant leaps in performance and efficiency. From specialized processing units to energy-saving techniques, the focus is on delivering tailored solutions for complex workloads.

Transformer-Specific Processing Units

Engineering breakthroughs have led to the development of processing units optimized for transformer models. IBM’s analog chips, for example, are reported to achieve 7x speed improvements over traditional designs. Reconfigurable chips have demonstrated energy efficiency gains of up to 27.6x, making them attractive for high-density applications.

3D chip stacking techniques enhance memory bandwidth, while analog compute-in-memory architectures reduce latency. Sparse attention optimization in silicon further boosts performance, enabling faster sequence processing. These advancements highlight the shift from general-purpose to specialized hardware.
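To illustrate why sparse attention reduces work, the sketch below compares full causal attention with a sliding-window pattern. The text does not say which sparsity pattern any given chip implements, so the sliding window is an assumption chosen for simplicity, and the function names are illustrative.

```python
def sliding_window_mask(seq_len: int, window: int):
    """mask[i][j] is True when query i may attend to key j.

    Each query sees itself and the (window - 1) tokens before it.
    Setting window == seq_len gives ordinary full causal attention.
    """
    return [[0 <= i - j < window for j in range(seq_len)]
            for i in range(seq_len)]

def attended_pairs(mask) -> int:
    """Number of query-key pairs that must actually be computed."""
    return sum(sum(row) for row in mask)

seq_len = 8
dense = attended_pairs(sliding_window_mask(seq_len, seq_len))
sparse = attended_pairs(sliding_window_mask(seq_len, 3))
print(f"full causal pairs:    {dense}")
print(f"sliding-window pairs: {sparse}")
```

Full causal attention grows quadratically with sequence length, while the windowed variant grows linearly, which is the property hardware support for sparse attention would exploit.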

Energy Efficiency Innovations

Energy consumption is a critical factor in modern computing. Liquid cooling solutions for high-density racks help manage heat. The adoption of 8-bit floating point precision reduces power usage and memory traffic, typically with only a small loss of accuracy for inference workloads.
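The precision trade-off of 8-bit floating point can be seen by rounding ordinary values to an 8-bit grid. The sketch below assumes the E4M3 layout (4 exponent bits, 3 mantissa bits, largest finite value 448), which the text does not specify, and it saturates out-of-range values instead of modeling special values. It is a software illustration only, not a description of any chip's arithmetic.

```python
import math

E4M3_MAX = 448.0      # largest finite value in the assumed E4M3 format
MIN_NORMAL_EXP = -6   # smallest normal exponent; below this, spacing is fixed
MANTISSA_BITS = 3

def quantize_e4m3(x: float) -> float:
    """Round x to the nearest value representable in (assumed) FP8 E4M3."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    ax = min(abs(x), E4M3_MAX)              # saturate instead of overflowing
    _, e = math.frexp(ax)                   # ax = m * 2**e with m in [0.5, 1)
    exponent = max(e - 1, MIN_NORMAL_EXP)   # subnormals share the lowest exponent
    step = 2.0 ** (exponent - MANTISSA_BITS)  # gap between neighboring values
    return sign * round(ax / step) * step   # round half to even, like IEEE 754

for value in [1.0, 0.3, -0.3, 3.14159, 1000.0]:
    q = quantize_e4m3(value)
    print(f"{value:>10} -> {q:<8} (abs error {abs(value - q):.5f})")
```

Each weight stored this way takes one byte instead of two, so a 70B-parameter model's weights shrink from 140 GB to 70 GB, at the cost of the rounding error shown.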

Near-memory compute architectures minimize data movement, improving efficiency. Photonic computing integration and phase-change memory applications offer promising prospects for future designs. These innovations collectively reduce thermal design power (TDP) while maintaining high performance.

| Innovation | Impact |
|---|---|
| 3D Chip Stacking | Increased memory bandwidth |
| Analog Compute-in-Memory | Reduced latency |
| Liquid Cooling | Improved thermal management |
| 8-bit Floating Point | Lower power consumption |
| Photonic Computing | Future efficiency gains |

These technological breakthroughs are reshaping the landscape of computing. By focusing on efficiency and performance, engineers are delivering solutions that meet the demands of modern workloads. The future of hardware design lies in specialization and innovation.

Market Dynamics and Growth Projections

The global market for specialized hardware is experiencing unprecedented growth, driven by the increasing demand for high-performance solutions. Over the next few years, the industry is expected to see significant investments, with over $100B committed to AI infrastructure through 2026. This surge reflects the growing need for advanced compute solutions tailored to modern workloads.

Investment Landscape in AI Chip Development

Venture capital is flowing into AI hardware startups at an accelerating pace. Hyperscalers are also investing heavily in captive chip development, aiming to reduce reliance on third-party suppliers. Geopolitical factors, such as export control regulations on advanced nodes, are shaping supply chains and influencing investment strategies.

Foundry capacity allocation remains a challenge, with TSMC and Samsung competing for dominance. Price-performance curves for inference workloads are improving, making specialized hardware more attractive. Edge AI adoption is rising across industries, driven by the need for real-time data processing.

Future Market Trends

One projection puts the generative AI chip market at $0.78B by 2029. Memory pricing volatility and carbon footprint regulations are influencing hardware designs. ROI timelines for transformer-specific infrastructure are becoming shorter, encouraging further investment.

- Venture capital flow into AI hardware startups

- Hyperscaler captive chip development trends

- Geopolitical factors in semiconductor supply chains

- Foundry capacity allocation challenges (TSMC vs Samsung)

- Price-performance curves for inference workloads

As the industry evolves, companies are focusing on delivering efficient and scalable solutions. The shift towards specialized hardware is set to redefine the market, offering new opportunities for innovation and growth.

Applications and Industry Impact

Specialized hardware is revolutionizing multiple sectors with its advanced capabilities. From healthcare to finance and manufacturing, tailored solutions are driving efficiency and performance. These innovations are transforming how industries operate, delivering real-world impact through cutting-edge tech.

Healthcare and Pharmaceutical Applications

In healthcare, specialized hardware is enabling breakthroughs in medical imaging and drug discovery. Real-time analysis systems are improving diagnostic accuracy, while AI-generated clinical trial simulations accelerate research. Companies like Insilico Medicine are leveraging these advancements to optimize personalized medicine dosage and streamline drug development pipelines.

Financial Services Implementation

The financial sector is benefiting from enhanced fraud detection and automated report generation. High-frequency trading platforms now meet stringent latency requirements, thanks to advanced processing units. JPMorgan’s anti-money laundering systems use pattern detection models to ensure compliance and security. These innovations are reshaping how financial institutions handle data and power their operations.

Manufacturing and Industrial Uses

Manufacturing is seeing significant improvements through predictive maintenance and quality control systems. Smart factories utilize AI-driven models to forecast supply chain demands and optimize production. Tesla’s Full Self-Driving (FSD) transformer model deployment exemplifies how industrial applications benefit from specialized hardware. Digital twin simulations further enhance design and operational efficiency.

- Real-time medical imaging analysis systems

- High-frequency trading latency requirements

- Predictive maintenance in smart factories

- AI-generated clinical trial simulations

- Automated financial report generation

- Computer vision quality control systems

- Personalized medicine dosage optimization

- Anti-money laundering pattern detection

- Supply chain demand forecasting models

- Digital twin simulation capabilities

Conclusion

The future of computing increasingly points toward specialized hardware designed for transformer models. Etched is an early mover in this shift with its Sohu ASIC, built on TSMC’s 4nm process. The company positions the chip as delivering higher efficiency and performance than GPUs on transformer workloads.

With reserved customer commitments exceeding $10M, Etched’s first-mover advantage in ASIC specialization is clear. Their focus on tailored architecture ensures optimal compute capabilities for modern workloads. This positions them as a key player in the evolving landscape.

As companies adopt these solutions, workforce skill development and ethical considerations become critical. The environmental impact of high-density compute also demands attention. Enterprises should explore multi-vendor strategies to mitigate ecosystem lock-in risks.

Etched’s early access programs offer a chance to stay ahead. By embracing these advancements, organizations can drive innovation and secure their place in the next decade of development.

FAQ

Who designs hardware for transformer models in AI labs?

NVIDIA and AMD build GPUs used for transformer models, while specialized companies such as Etched, Groq, and Cerebras design accelerators tailored to these workloads.

What makes transformer-specific chips unique?

These chips are optimized for processing large language models, offering higher compute power and energy efficiency compared to traditional GPUs.

How do transformer models impact AI development?

They enable faster data processing, improved language understanding, and better performance in tasks like image and video analysis.

What are the key innovations in AI chip architecture?

Recent advancements include transformer-specific processing units and designs that reduce energy consumption while boosting compute capabilities.

Which industries benefit most from transformer-based AI chips?

Healthcare, finance, and manufacturing sectors leverage these chips for tasks like drug discovery, fraud detection, and automation.

What is the market outlook for AI chip development?

The market is growing rapidly, driven by increasing demand for AI applications and investments in cutting-edge hardware technologies.

How do companies ensure efficiency in transformer models?

By optimizing architectures for specific tasks, keeping compute units busy with techniques such as continuous batching, and raising tokens-per-second throughput.

What role do GPUs play in transformer model development?

GPUs like NVIDIA’s H100 provide the compute power needed to train and run large language models efficiently.

What are the challenges in designing transformer-specific hardware?

Balancing performance, energy efficiency, and scalability while meeting the demands of complex AI workloads.

How do transformer models influence the future of computing?

They drive innovation in hardware and software, shaping the next generation of artificial intelligence technologies.