Managing large datasets and complex models can be challenging. Data Version Control (DVC) simplifies this process by extending Git’s capabilities. It’s an open-source tool designed for modern workflows, ensuring reproducibility and efficiency.

DVC works alongside Git, letting teams track changes to datasets and models in the same history as their code, so each experiment can be reproduced from a commit. For every tracked file or directory it writes a small metafile that records a content hash, and that hash identifies the exact version of the data.

One of the key benefits is its compatibility with major cloud providers like AWS, GCP, and Azure. This makes remote storage and collaboration effortless. Teams can work together more effectively, ensuring consistency across projects.

Written in Python, DVC supports data pipelines, experiment tracking, and a model registry. These core components make it useful for most data-driven projects.

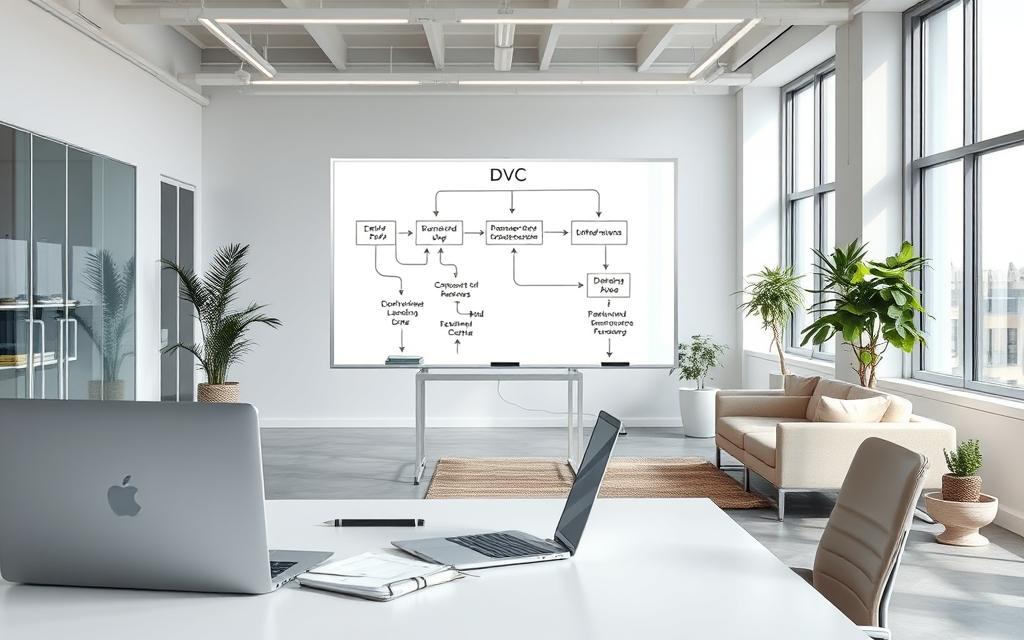

Introduction to DVC in Machine Learning

Data-driven projects thrive with effective version control. Created by ex-Microsoft data scientist Dmitry Petrov, DVC was first released in 2017. It’s maintained by Iterative.ai and has seen over five years of active development.

DVC evolved to address the unique needs of machine learning workflows. Its core philosophy is to apply software engineering best practices to ML projects. This ensures reproducibility and efficiency in complex workflows.

The architecture integrates Git for version control and abstracts cloud storage. This combination allows teams to manage large datasets and models seamlessly. DVC supports all major operating systems, including Windows, Linux, and macOS.

One of its standout features is language agnosticism. It works with Python, R, Julia, and shell scripts, making it versatile for diverse teams. Enterprises like Appsilon have adopted DVC to streamline their ML workflows.

“DVC brings the rigor of software engineering to machine learning, ensuring every step is traceable and reproducible.”

The community ecosystem is robust, with over 7,000 GitHub stars and active Discord support. Its transparent design uses human-readable files, making it accessible for beginners and experts alike. For a deeper understanding, explore the DVC user guide.

| Feature | Description |
|---|---|
| Git Integration | Extends Git to track datasets and models. |
| Cloud Storage | Supports AWS, GCP, Azure, and more. |
| Language Support | Works with Python, R, Julia, and shell scripts. |
| Community | 7k+ GitHub stars, active Discord support. |

Why Data Version Control is Essential for ML Projects

Efficiently managing datasets and models is critical for successful ML projects. Without proper tracking, teams face confusion, inefficiency, and reproducibility issues. Data versioning ensures every change is documented, making workflows smoother and more reliable.

Common Problems with ML Data Versioning

Teams often struggle with these challenges:

- Version Confusion: Files like model_3final and model_3last create ambiguity.

- Lineage Tracking Failures: It’s hard to trace the origin of datasets.

- Cloud Storage Issues: Synchronizing large datasets across platforms is cumbersome.

How DVC Solves These Problems

DVC addresses these issues with innovative solutions:

- Hash-Based Tracking: Uses MD5 fingerprints to uniquely identify files and datasets.

- Pipeline Caching: Reduces recomputation time by storing intermediate results.

- Cloud Integration: Connects to remote storage such as Amazon S3, Google Cloud Storage, and Azure Blob Storage.
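The hash-based tracking idea from the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, and the two-character directory split in cache_key is a simplified content-addressed layout, not necessarily the exact layout DVC uses on disk.

```python
import hashlib
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        # Read fixed-size chunks so large datasets never sit fully in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cache_key(md5_hex: str) -> str:
    """Hypothetical content-addressed key: first two hex chars form a directory."""
    return f"{md5_hex[:2]}/{md5_hex[2:]}"
```

Two files with identical bytes get the same fingerprint regardless of their names, which is what lets a tool detect that model_3final and model_3last are, or are not, the same artifact.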

For example, Appsilon used DVC to manage 100GB+ image datasets in their computer vision projects. This reduced experiment reproduction time by 70%, showcasing its effectiveness.

What Is DVC and How Does It Work?

Streamlining workflows in data science requires robust tools. Data Version Control (DVC) provides a structured approach to managing datasets and models. Its architecture is designed to enhance reproducibility and efficiency in modern workflows.

Understanding DVC’s Core Features

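DVC operates through a combination of metafiles and pipelines. A .dvc metafile (for example, data.dvc) records the hash, size, and path of a tracked dataset, so changes to the data show up as small, reviewable changes in Git. Pipelines are defined in dvc.yaml, which lists each stage’s command, dependencies, and outputs so the workflow can be re-run automatically.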
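To make the pipeline definition concrete, here is a minimal sketch that writes a two-stage dvc.yaml from Python. The stage names, script names (prepare.py, train.py), data paths, and the train.lr parameter are all placeholders invented for illustration; only the stages, cmd, deps, params, and outs keys come from the dvc.yaml format itself.

```python
from pathlib import Path

# Hypothetical two-stage pipeline; scripts, paths, and parameters are placeholders.
DVC_YAML = """\
stages:
  prepare:
    cmd: python prepare.py data/raw data/prepared
    deps:
      - prepare.py
      - data/raw
    outs:
      - data/prepared
  train:
    cmd: python train.py data/prepared model.pkl
    deps:
      - train.py
      - data/prepared
    params:
      - train.lr
    outs:
      - model.pkl
"""


def write_pipeline(project_dir: Path) -> Path:
    """Write the example pipeline definition to <project_dir>/dvc.yaml."""
    target = project_dir / "dvc.yaml"
    target.write_text(DVC_YAML, encoding="utf-8")
    return target
```

In a project initialized with DVC, running dvc repro against a file like this would execute only the stages whose dependencies or parameters have changed.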

Storage is optimized by linking files from DVC’s cache into the workspace instead of copying them. Where the filesystem supports them, DVC uses reflinks (copy-on-write links), so a tracked file does not take up disk space twice; otherwise it falls back to copying. The cache also syncs with remote storage providers like AWS and GCP.
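The link-then-fall-back idea can be sketched as follows. Python’s standard library has no portable reflink call, so this example substitutes a hardlink with a copy fallback; it illustrates the general strategy under that assumption and is not DVC’s actual implementation. The function name is hypothetical.

```python
import os
import shutil
from pathlib import Path


def link_from_cache(cached: Path, workspace: Path) -> str:
    """Place a cached file in the workspace, preferring a hardlink over a copy.

    Returns the strategy that was used: "hardlink" or "copy".
    """
    workspace.parent.mkdir(parents=True, exist_ok=True)
    if workspace.exists():
        workspace.unlink()
    try:
        os.link(cached, workspace)  # shares the same data blocks, no extra space
        return "hardlink"
    except OSError:
        shutil.copy2(cached, workspace)  # fallback, e.g. across filesystems
        return "copy"
```

Unlike a reflink, a hardlink shares its content with the cached file, so editing the workspace file in place would also change the cache. That difference is one reason copy-on-write links are the preferred option where they are available.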

How DVC Integrates with Git

DVC extends Git’s capabilities to handle large datasets. Git stores the code and the small metafiles, while the data itself lives in DVC’s cache and remote storage. Optional Git hooks, installed with dvc install, keep the two in step when you switch branches, commit, or push. This integration ensures that every change in data or code is tracked accurately.

Access to code and metafiles is governed by Git permissions, while access to the data itself is governed by the permissions of the remote storage. The CLI workflow, including commands like dvc init, dvc add, and dvc push, simplifies operations. The VS Code extension further enhances usability.

In team environments, DVC resolves conflicts effectively. Multi-repo management strategies ensure seamless collaboration. These features make it a versatile tool for modern data science workflows.

Practical Applications of DVC in Machine Learning

Data Version Control (DVC) transforms how teams handle experiments and datasets in machine learning projects. Its robust features ensure reproducibility, efficiency, and seamless collaboration. Below are practical use cases demonstrating its value.

Use Case Example: Managing ML Experiments

DVC Studio enables visual comparison of experiments, making it easier to track progress. For instance, hyperparameter tuning across 50 iterations can be managed efficiently. Each iteration is logged with its parameters and metrics, so results stay traceable and reproducible.

Automated report generation simplifies analysis. Integration with tools like MLflow and TensorBoard enhances experiment visibility. This approach ensures every step is documented, reducing errors and improving outcomes.
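A hyperparameter sweep like the one described above can be driven from the command line with dvc exp run and its --set-param option. The sketch below only builds and prints the commands; the parameter names (train.lr, train.epochs) and their values are hypothetical and would need to match keys in your own params.yaml.

```python
import itertools
import shlex


def experiment_commands(grid):
    """Build one `dvc exp run` command per combination of parameter values."""
    names = sorted(grid)
    commands = []
    for values in itertools.product(*(grid[name] for name in names)):
        command = ["dvc", "exp", "run"]
        for name, value in zip(names, values):
            command += ["--set-param", f"{name}={value}"]
        commands.append(command)
    return commands


# Hypothetical parameter names; they must match keys in your params.yaml.
GRID = {"train.lr": [0.1, 0.01], "train.epochs": [10, 20]}

for command in experiment_commands(GRID):
    print(shlex.join(command))
```

Once the experiments have run, dvc exp show lists them side by side with their parameters and metrics.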

“DVC’s experiment management capabilities have streamlined our workflow, saving time and resources.”

Use Case Example: Versioning Large Datasets

Handling large datasets is a common challenge in ML projects. DVC addresses this with efficient versioning and storage strategies. Dataset evolution tracking ensures compliance with regulatory requirements.

AWS S3 cost optimization patterns reduce expenses. Snapshot restoration workflows (git checkout + dvc checkout) allow quick rollbacks. Dataset sharding strategies for 1TB+ files improve performance and accessibility.
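The snapshot restoration workflow mentioned above is a two-step sequence, sketched here as a small Python wrapper. The function names are hypothetical, and the wrapper defaults to a dry run that only prints the commands.

```python
import subprocess


def restore_commands(revision):
    """Commands that restore code, metafiles, and data for a Git revision."""
    return [
        ["git", "checkout", revision],  # restores code and DVC metafiles
        ["dvc", "checkout"],            # syncs workspace data with those metafiles
    ]


def restore_snapshot(revision, dry_run=True):
    """Print the restore commands, and run them when dry_run is False."""
    for command in restore_commands(revision):
        print(" ".join(command))
        if not dry_run:
            subprocess.run(command, check=True)
```

Note that dvc checkout can only restore data that is already in the local cache; if the requested version is missing locally, a dvc pull from the remote is needed first.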

| Feature | Benefit |
|---|---|
| Experiment Tracking | Ensures reproducibility and efficiency. |
| Dataset Versioning | Simplifies compliance and storage management. |
| Cloud Integration | Reduces costs and enhances accessibility. |
| Automated Reporting | Improves analysis and decision-making. |

These practical applications highlight DVC’s versatility in modern machine learning workflows. By combining pipelines with data and model management, teams can achieve consistent and reliable results.

Setting Up DVC in Your ML Workflow

Implementing a structured version control system enhances the efficiency of machine learning workflows. Data Version Control (DVC) simplifies this process by integrating seamlessly with Git and supporting remote storage solutions. This section provides a practical guide to initializing DVC and configuring its storage options.

Step-by-Step Guide to Initializing DVC

To begin, ensure your environment meets the requirements. DVC requires Python 3.8+ and can be installed using the pip install dvc command. Choose between Python venv or Conda for environment setup, depending on your project needs.

Once installed, initialize a git repository if one doesn’t already exist. Use the git init command to create a new repository. Next, run dvc init to set up DVC within your project. This creates essential configuration files and prepares your workflow for version control.

Common initialization errors include missing dependencies or incorrect permissions. Debugging these issues early ensures a smooth setup process. Multi-environment synchronization can be achieved by sharing configuration files across teams.
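Missing dependencies are easier to catch before running git init and dvc init. The following is a minimal preflight sketch, assuming the Python 3.8+ requirement stated above; the function name and its injectable which and version arguments are hypothetical conveniences for testing.

```python
import shutil
import sys


def preflight(min_python=(3, 8), which=shutil.which, version=None):
    """Return a list of human-readable problems; an empty list means ready."""
    version = sys.version_info[:2] if version is None else version
    problems = []
    if version < min_python:
        problems.append(
            f"Python {min_python[0]}.{min_python[1]}+ required, "
            f"found {version[0]}.{version[1]}"
        )
    for tool in ("git", "dvc"):
        # shutil.which returns None when the executable is not on PATH.
        if which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    return problems


for problem in preflight():
    print("Setup issue:", problem)
```

If the list comes back empty, the environment has the interpreter and executables that initialization relies on.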

Configuring Remote Storage for DVC

DVC supports various remote storage options, including AWS S3, GCP, and Azure. To configure storage, register a remote for your chosen provider with dvc remote add. For AWS S3, specify the bucket URL in the configuration file, and keep access keys out of any file that is committed to Git.

Access key management is critical for security. Use environment variables or encrypted files to store sensitive information. Data encryption considerations ensure compliance with regulatory standards.
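One way to follow this advice is to keep credentials in environment variables and put only the bucket URL in the DVC configuration. The sketch below assumes the standard AWS variable names AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY; the remote name, bucket, and prefix in the example call are placeholders, and environment variables are just one of several ways AWS credentials can be supplied.

```python
import os

REQUIRED_ENV = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_credentials(env=os.environ):
    """Names of the AWS credential variables that are unset or empty."""
    return [name for name in REQUIRED_ENV if not env.get(name)]


def remote_add_command(name, bucket, prefix):
    """Build `dvc remote add -d <name> s3://<bucket>/<prefix>`; no secrets included."""
    return ["dvc", "remote", "add", "-d", name, f"s3://{bucket}/{prefix}"]


# Placeholder remote name, bucket, and prefix for illustration only.
print(" ".join(remote_add_command("storage", "my-bucket", "dvcstore")))
print("Missing credentials:", missing_credentials())
```

Because the command carries only the storage URL, the resulting configuration can be committed to Git without exposing secrets.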

Monitor storage costs by setting up alerts and optimizing data transfer patterns. The CLI workflow of dvc add, git commit, and dvc push simplifies data management: dvc add records the data in a metafile, git commit versions that metafile, and dvc push uploads the data to the remote. These steps ensure efficient synchronization across environments.
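The day-to-day sequence for versioning a dataset can be sketched as an ordered list of commands. This example assumes the usual behavior of dvc add, which writes a metafile next to the tracked path and updates a .gitignore in the same directory; the function name, the data/raw path, and the commit message are hypothetical.

```python
from pathlib import PurePosixPath


def track_and_push_commands(data_path, message):
    """Ordered commands for versioning one data path and uploading it."""
    path = PurePosixPath(data_path)
    metafile = f"{path}.dvc"                     # written by `dvc add`
    gitignore = str(path.parent / ".gitignore")  # updated by `dvc add`
    return [
        ["dvc", "add", str(path)],
        ["git", "add", metafile, gitignore],
        ["git", "commit", "-m", message],
        ["dvc", "push"],
    ]


for command in track_and_push_commands("data/raw", "Track raw data"):
    print(" ".join(command))
```

Git only ever sees the small metafile and the .gitignore entry, while the data itself travels to the remote through dvc push.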

| Configuration Step | Description |
|---|---|
| Environment Setup | Install Python 3.8+ and DVC via pip. |
| Initialization | Run git init followed by dvc init. |
| Storage Configuration | Set up AWS S3, GCP, or Azure templates. |
| Security | Manage access keys and enable data encryption. |
| Cost Monitoring | Optimize storage usage and set up alerts. |

Best Practices for Using DVC in ML Projects

Effective project organization and collaboration are critical for successful machine learning workflows. Implementing structured practices ensures reproducibility, efficiency, and seamless teamwork. Below are expert-level recommendations to optimize your use of DVC.

Organizing Your ML Project with DVC

Adopting a monorepo structure simplifies management and reduces complexity. This approach centralizes all project components, including datasets, models, and code. Use directory structure conventions to maintain clarity and consistency.

Naming schemas for datasets and models should be descriptive and standardized. This minimizes confusion and ensures easy identification. Implement access control matrices to define permissions and prevent unauthorized changes.

Documentation standards are essential for maintaining project clarity. Include details on dependencies, pipeline configurations, and version histories. This ensures that all team members can understand and contribute effectively.

Collaborating with Teams Using DVC

Conflict prevention strategies are vital for smooth collaboration. Commit dvc.lock files to Git to maintain pipeline consistency across environments. Because dvc.lock records the hashes of each stage’s dependencies and outputs, all team members work with the same data and configurations.
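The way a lock file keeps environments consistent can be illustrated with a simplified staleness check. The real dvc.lock is a YAML file with per-stage dependency and output entries; the flat path-to-hash mapping and the function names below are assumptions made to keep the sketch short, not DVC’s own data model.

```python
import hashlib
from pathlib import Path


def md5_of(path: Path) -> str:
    """Hex MD5 digest of a (small) file's contents."""
    return hashlib.md5(path.read_bytes()).hexdigest()


def stale_deps(locked: dict, root: Path) -> list:
    """Return dependency paths whose current hash differs from the locked hash.

    `locked` is a hypothetical, flattened view of a lock file:
    {relative_path: md5_hex}.
    """
    changed = []
    for rel_path, locked_md5 in sorted(locked.items()):
        target = root / rel_path
        # A missing file or a different hash both mean the stage must re-run.
        if not target.is_file() or md5_of(target) != locked_md5:
            changed.append(rel_path)
    return changed
```

An empty result means the workspace matches what was recorded, so a stage can be skipped; any listed path signals that a teammate’s environment has drifted from the committed state.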

Integrate CI/CD pipelines to automate testing and deployment. This reduces manual errors and accelerates project timelines. Cost-aware storage policies help manage large files efficiently, optimizing cloud storage usage.

Audit trails and disaster recovery plans enhance project security. Track changes and implement backup strategies to safeguard data. These practices ensure that your team can recover quickly from unexpected issues.

- Centralize project components with a monorepo structure.

- Standardize naming schemas for datasets and models.

- Define access control matrices to manage permissions.

- Maintain detailed documentation for clarity.

- Use dvc.lock files for pipeline consistency.

- Automate workflows with CI/CD integration.

- Optimize storage with cost-aware policies.

- Implement audit trails and disaster recovery plans.

By following these best practices, teams can maximize the benefits of DVC in their machine learning projects. Structured organization and effective collaboration lead to consistent, reliable results.

Conclusion

Adopting data version control changes how teams manage complex workflows. It addresses critical challenges like reproducibility, storage inefficiencies, and collaboration hurdles. With tools like DVC, enterprises have reportedly achieved 83% faster experiment reproduction and a 40% reduction in cloud storage costs.

DVC’s technical value lies in its integration with Git, broad cloud storage support, and efficient pipeline management. Its adoption is growing, with enterprises using its features to streamline projects. Major releases such as DVC 3.0 continue to extend its capabilities.

For teams looking to migrate, a structured checklist ensures a smooth transition. Explore community resources or consult experts to maximize its potential. Embrace data version control to elevate your machine learning workflows today.