Convolutional neural networks (CNNs) have revolutionized the field of machine learning, particularly in image processing. These specialized models mimic the human visual cortex, enabling them to detect patterns and features with remarkable accuracy.

Often classified under deep learning, CNNs incorporate multiple layers, such as convolutional and pooling layers, to extract hierarchical features. This depth allows them to handle complex tasks, from object recognition to medical imaging.

Modern architectures like ResNet and VGGNet showcase the power of these networks. Companies like NVIDIA have further enhanced their computational efficiency, making them indispensable in today’s tech landscape.

While debates exist about the exact depth required for a network to be “deep,” IBM and other experts classify CNNs as part of the deep learning family. Their ability to process vast amounts of data and learn intricate patterns solidifies their place in advanced neural networks.

Introduction to Convolutional Neural Networks (CNNs)

The development of convolutional neural networks has transformed how machines interpret visual data. These specialized models excel in tasks like image classification, making them indispensable in fields ranging from healthcare to autonomous driving.

What is a CNN?

A convolutional neural network is a feedforward model that slides learned filters over its input to extract features. Two principles make this efficient: weight sharing, where the same filter is applied at every position, and the resulting shift equivariance, where a shifted input produces a correspondingly shifted feature map. Because the filters are learned from data, the network identifies patterns without requiring extensive pre-processing.

Brief History of CNNs

The origins of convolutional neural networks trace back to Fukushima’s Neocognitron in 1980, a prototype inspired by biological vision. Yann LeCun later advanced the field in 1989 by applying backpropagation to recognize handwritten ZIP codes. His work culminated in LeNet-5, a model widely used for check recognition systems.

The 2012 ImageNet competition marked a turning point. AlexNet, a groundbreaking neural network, demonstrated the potential of GPUs for training complex models. This event accelerated the adoption of convolutional neural networks across industries.

Modern architectures like ResNet and VGGNet build on these foundations, offering deeper layers and improved accuracy. NVIDIA’s contributions to GPU-accelerated training have further enhanced their computational efficiency.

Understanding Deep Neural Networks

Deep neural networks represent a significant leap in machine learning capabilities. These systems process data through multiple nonlinear layers, enabling them to learn complex patterns. Unlike traditional methods, they automate feature extraction, reducing the need for manual engineering.

Definition of Deep Neural Networks

A deep neural network typically includes three or more hidden layers. This depth allows for hierarchical learning, where each layer extracts increasingly abstract features. IBM defines these systems as part of the deep learning family, emphasizing their ability to handle vast datasets.

Key Characteristics of Deep Neural Networks

These systems rely on layers to process information. Each layer transforms input data, creating a hierarchy of features. NVIDIA’s GPU technology plays a crucial role in accelerating training, making these models computationally efficient.

One major challenge is the vanishing gradient problem. Solutions like ReLU activation functions help maintain stable gradients during training. Weight sharing further enhances parameter efficiency, reducing the computational load.
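
A rough numerical sketch of why the activation choice matters: backpropagation multiplies one local derivative per layer, and the sigmoid derivative never exceeds 0.25, while the ReLU derivative is exactly 1 for positive inputs. The depth of 20 and the input values are arbitrary illustrative choices, and the sketch deliberately ignores the weight matrices.

```python
import math

def sigmoid_grad(x: float) -> float:
    """Derivative of the logistic sigmoid at x."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def relu_grad(x: float) -> float:
    """Derivative of ReLU at x (taken as 0 at x == 0)."""
    return 1.0 if x > 0 else 0.0

def chained_gradient(local_grad, x: float, depth: int) -> float:
    """Product of `depth` identical local derivatives, ignoring weights."""
    return local_grad(x) ** depth

depth = 20  # illustrative depth, not taken from any specific model
print(chained_gradient(sigmoid_grad, 0.0, depth))  # 0.25 ** 20, roughly 9.1e-13
print(chained_gradient(relu_grad, 1.0, depth))     # 1.0
```

Even at the sigmoid's steepest point the product collapses toward zero, whereas the ReLU path passes the gradient through unchanged for active units.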

| Aspect | Deep Neural Networks | Shallow Networks |
|---|---|---|
| Layers | 3+ hidden layers | 1-2 hidden layers |
| Feature Learning | Automatic | Manual |
| Computational Needs | High (GPU-dependent) | Low |

Deep neural networks excel in tasks requiring hierarchical learning. Their large parameter counts give them the capacity to model complex data, at the cost of heavy reliance on GPUs for training. These characteristics explain why they continue to push the boundaries of deep learning.

Is CNN a Deep Neural Network?

Layer depth plays a pivotal role in defining the capabilities of convolutional architectures. These systems rely on multiple stages to process visual data, making them highly effective for tasks like image recognition. The question of whether they qualify as deep neural networks often arises, given their layered structure.

Exploring the Depth of CNNs

Historical models like LeNet-5 featured seven layers, a significant leap at the time. AlexNet expanded this to eight, with five convolutional stages. Modern architectures, such as ResNet, now boast over 150 layers. This progression highlights the increasing complexity of these systems.

Successive convolution and pooling stages enable these models to extract hierarchical features. Each layer builds on the previous one, refining the representation of input data. This approach allows for efficient processing of complex visual patterns.

Comparing CNNs to Other Deep Neural Networks

Convolutional architectures are far more parameter-efficient than fully connected systems. Because each filter’s weights are shared across every spatial position of the input, the number of parameters depends on the filter size and channel count rather than on the image size. This optimization makes them ideal for large-scale visual tasks.
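
To put rough numbers on that efficiency, the sketch below counts the parameters of a single layer applied to a 100×100 RGB input. The choice of 64 output units or filters and a 3×3 kernel is an assumption made for illustration, and the function names are hypothetical.

```python
def dense_params(in_h: int, in_w: int, in_c: int, out_units: int) -> int:
    """Weights plus biases for a fully connected layer on a flattened image."""
    return in_h * in_w * in_c * out_units + out_units

def conv_params(kernel_h: int, kernel_w: int, in_c: int, out_c: int) -> int:
    """Weights plus biases for a convolutional layer (independent of image size)."""
    return kernel_h * kernel_w * in_c * out_c + out_c

print(dense_params(100, 100, 3, 64))  # 1920064
print(conv_params(3, 3, 3, 64))       # 1792
```

The convolutional layer's count stays the same if the image grows to 1000×1000, while the fully connected layer's count grows with every added pixel.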

Depth-width tradeoffs also play a crucial role. While deeper models capture more intricate features, they demand greater computational resources. NVIDIA’s advancements in GPU technology have addressed these challenges, enabling faster training times.

Unlike recurrent systems, which focus on temporal depth, convolutional models prioritize spatial hierarchies. IBM classifies them as part of the deep learning family, emphasizing their ability to handle vast datasets. This perspective underscores their importance in modern machine learning.

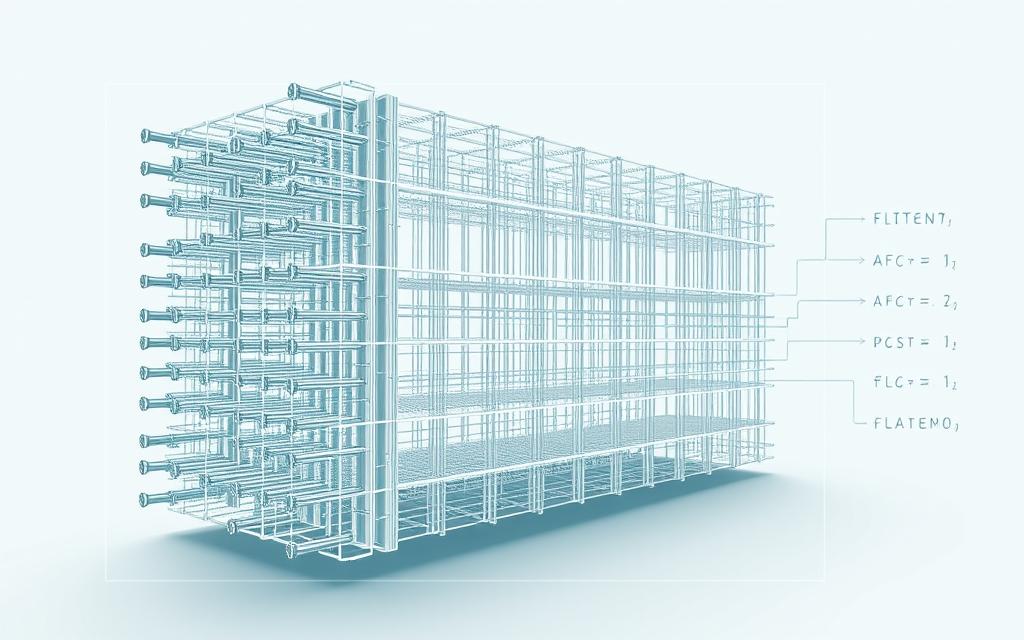

Core Components of CNNs

At the heart of modern image processing lies a trio of essential components. These layers work together to extract, refine, and classify visual data with precision. By understanding their roles, we can appreciate the efficiency of convolutional architectures.

Convolutional Layers

Convolutional layers serve as the primary feature extractors. They process input data using filters that slide across the input image, applying the Frobenius inner product. Stride and padding parameters control how these filters move, ensuring comprehensive coverage.

Post-convolution, ReLU transformations introduce non-linearity, enhancing the model’s ability to capture complex patterns. Filter sizes like 3×3 and 5×5 offer tradeoffs between detail and computational efficiency. Fukushima’s original S-layer and C-layer concepts laid the groundwork for these operations.
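
The sliding-filter operation with stride and zero padding, followed by ReLU, can be sketched in plain Python. This is a minimal single-channel version written for readability, not speed; the 4×4 image and the 2×2 vertical-edge kernel are made-up illustrative values.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D cross-correlation, the operation CNN layers compute."""
    k_h, k_w = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    # Surround the image with `padding` rows and columns of zeros.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = image[r][c]
    out_h = (h + 2 * padding - k_h) // stride + 1
    out_w = (w + 2 * padding - k_w) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Elementwise product of the kernel and the window, summed.
            total = 0.0
            for a in range(k_h):
                for b in range(k_w):
                    total += padded[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(total)
        out.append(row)
    return out

def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

image = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
kernel = [  # responds where the left column is brighter than the right
    [1, -1],
    [1, -1],
]
fmap = relu(conv2d(image, kernel))
print(fmap)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is nonzero only in the middle column, which is where the bright-to-dark edge sits in the input.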

Pooling Layers

Pooling layers reduce the dimensionality of feature maps, making the system more efficient. Max pooling selects the highest value within a window, while average pooling computes the mean. These operations preserve essential features while minimizing redundancy.
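
A minimal sketch of both pooling variants on a single feature map follows. The 2×2 window with stride 2 is a common choice but an assumption here, and the input values are invented for illustration.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Max or average pooling over square windows of a 2D feature map."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + a][j + b]
                      for a in range(size) for b in range(size)]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        out.append(row)
    return out

fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 8, 4],
    [2, 0, 4, 4],
]
print(pool2d(fmap, mode="max"))  # [[6, 2], [2, 8]]
print(pool2d(fmap, mode="avg"))  # [[3.5, 1.0], [1.0, 5.0]]
```

Either way the 4×4 map shrinks to 2×2, a fourfold reduction in values passed to the next layer.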

Pooling layers themselves have no learnable weights; the parameter savings IBM highlights come from weight sharing in the convolutional layers. Depthwise separable convolutions, used in architectures such as MobileNet, further reduce computation, enabling faster processing of visual data.

Fully Connected Layers

Fully connected layers play a crucial role in classification. They combine features extracted by previous layers to produce final predictions. LeNet-5, one of the earliest architectures, demonstrated this process effectively.

These layers transform high-dimensional data into actionable insights. By leveraging hierarchical feature extraction, they ensure accurate classification across diverse datasets.

How CNNs Learn Features

Hierarchical feature extraction lies at the core of modern visual recognition systems. These systems transform raw data into meaningful patterns, enabling accurate classification and detection. By understanding this process, we can appreciate the efficiency of convolutional architectures.

Feature Extraction Process

Visual data undergoes a transformative journey, starting with edge detection. Initial layers identify simple features like lines and curves. Subsequent layers build on this foundation, recognizing textures and shapes. Finally, higher layers detect complex objects, forming a hierarchy of patterns.

This process mimics the human visual cortex, as described by Hubel and Wiesel. Their research on biological receptive fields inspired Fukushima’s Neocognitron, a precursor to modern convolutional systems. IBM emphasizes that this hierarchical approach reduces the need for manual feature engineering.

Role of Filters and Kernels

Filters and kernels play a pivotal role in feature extraction. These small matrices slide across input data, identifying specific patterns. Kernel weights optimize through backpropagation, enhancing the model’s accuracy. NVIDIA’s advancements in GPU technology have accelerated this optimization, making it more efficient.

Initialization methods like Xavier and He help keep training stable. These techniques scale the initial weights according to layer size so that activations and gradients neither vanish nor explode in the early iterations. As layers deepen, filters become more specialized, capturing intricate details. For example, VGG16’s architecture progresses from 64 to 512 channels, reflecting this increasing complexity.
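
As a sketch of how these two schemes pick a scale, the code below uses the normal-distribution variants: Xavier draws weights with standard deviation sqrt(2 / (fan_in + fan_out)) and He with sqrt(2 / fan_in). The helper names and the example layer sizes are hypothetical.

```python
import math
import random

def conv_fans(kernel_size: int, in_channels: int, out_channels: int):
    """Fan-in and fan-out of a square convolutional kernel."""
    receptive = kernel_size * kernel_size
    return receptive * in_channels, receptive * out_channels

def xavier_std(fan_in: int, fan_out: int) -> float:
    """Standard deviation for Xavier (Glorot) normal initialization."""
    return math.sqrt(2.0 / (fan_in + fan_out))

def he_std(fan_in: int) -> float:
    """Standard deviation for He normal initialization, suited to ReLU."""
    return math.sqrt(2.0 / fan_in)

def init_kernel(kernel_size, in_channels, out_channels, scheme="he", seed=0):
    """Draw a flat list of initial weights for one convolutional layer."""
    rng = random.Random(seed)
    fan_in, fan_out = conv_fans(kernel_size, in_channels, out_channels)
    std = he_std(fan_in) if scheme == "he" else xavier_std(fan_in, fan_out)
    count = kernel_size * kernel_size * in_channels * out_channels
    return [rng.gauss(0.0, std) for _ in range(count)]

fan_in, fan_out = conv_fans(3, 64, 128)
print(fan_in, fan_out)            # 576 1152
print(he_std(fan_in))             # about 0.0589
print(xavier_std(fan_in, fan_out))  # about 0.0340
```

Wider layers get proportionally smaller initial weights, which keeps the variance of each layer's output roughly constant.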

However, deeper layers may exhibit filter redundancy. Some kernels detect similar patterns, reducing overall efficiency. Addressing this challenge remains a focus of ongoing research, with IBM and NVIDIA leading the way in kernel optimization.

Architecture of CNNs

The architecture of convolutional systems plays a critical role in their ability to process visual data effectively. These systems rely on a structured hierarchy of layers to transform raw input into meaningful patterns. Each stage contributes to the overall efficiency and accuracy of the network.

Input Layer and Image Processing

The input layer serves as the gateway for visual data. A 100×100 RGB image, for example, converts into a 100×100×3 tensor. Normalization techniques ensure consistent data scaling, improving training stability. Channel-wise processing allows the system to handle both RGB and grayscale images efficiently.
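
The tensor shape and a simple normalization step can be illustrated with nested lists. This sketch assumes 8-bit pixel values and scales them to the range 0 to 1, which is only one of several normalization schemes; the solid red test image is invented.

```python
def shape(image):
    """Return (height, width, channels) of a nested-list image."""
    return (len(image), len(image[0]), len(image[0][0]))

def to_unit_range(image):
    """Scale 8-bit pixel values (0..255) to floats in [0, 1]."""
    return [[[v / 255.0 for v in pixel] for pixel in row] for row in image]

def channel_means(image):
    """Mean value of each channel across all pixels."""
    h, w, c = shape(image)
    return [
        sum(image[r][col][ch] for r in range(h) for col in range(w)) / (h * w)
        for ch in range(c)
    ]

# A 100x100 image where every pixel is pure red.
image = [[[255, 0, 0] for _ in range(100)] for _ in range(100)]
scaled = to_unit_range(image)
print(shape(scaled))          # (100, 100, 3)
print(channel_means(scaled))  # [1.0, 0.0, 0.0]
```

A grayscale image would follow the same path with a channel dimension of 1.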

NVIDIA’s optimized input pipelines further enhance preprocessing speed. These advancements reduce latency, enabling faster data ingestion. Medical imaging adaptations, such as 3D convolutions, demonstrate the versatility of these systems.

Hidden Layers and Feature Maps

Hidden layers extract hierarchical features through successive convolutions. Each filter generates a feature map, with the number of channels corresponding to the filter count. Receptive fields expand progressively, capturing increasingly complex patterns.
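
The growth of the receptive field can be computed layer by layer: each layer adds (kernel size minus 1) times the product of all earlier strides. The layer stack below is a made-up example, not a specific published architecture.

```python
def receptive_field(layers):
    """Receptive field size after each layer.

    `layers` is a list of (kernel_size, stride) pairs, in order.
    """
    field, jump = 1, 1  # `jump` is the input-pixel distance between adjacent outputs
    sizes = []
    for kernel_size, stride in layers:
        field += (kernel_size - 1) * jump
        jump *= stride
        sizes.append(field)
    return sizes

# Two 3x3 convolutions, a 2x2 pooling layer with stride 2, then another 3x3 convolution.
stack = [(3, 1), (3, 1), (2, 2), (3, 1)]
print(receptive_field(stack))  # [3, 5, 6, 10]
```

After the pooling layer, each additional 3×3 convolution widens the receptive field by four input pixels instead of two, which is why downsampling helps deeper layers see larger structures.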

IBM’s research highlights the importance of layer complexity progression. Deeper layers refine feature extraction, enabling precise recognition. This approach minimizes redundancy while maximizing computational efficiency.

Output Layer and Classification

The output layer finalizes the classification process. Softmax activation converts the final layer’s raw scores into probabilities that sum to one across the classes. Fully connected layers, like those in AlexNet, combine features for comprehensive analysis.
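
A minimal softmax sketch is shown below. Subtracting the maximum score before exponentiating is a standard numerical-stability trick and does not change the result; the example scores are invented.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # shift so the largest exponent is exp(0)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))
print(probs)
print(predicted_class)  # 0
```

The predicted class is simply the index with the highest probability, so softmax changes how confident the scores look but never which class ranks first.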

Global pooling offers an alternative to fully connected layers, reducing parameter count. Output node configurations align with class counts, ensuring precise results. This structure solidifies the system’s ability to handle diverse datasets.

Training CNNs

Training convolutional systems requires a combination of advanced techniques and computational resources. These systems rely on backpropagation to adjust weights and improve accuracy. By leveraging the chain rule, gradients flow through each layer, ensuring precise updates.

Cross-entropy loss serves as a common loss function for classification tasks. It measures the difference between the predicted class probabilities and the true labels, guiding the training process. NVIDIA’s mixed-precision approach enhances computational efficiency, reducing memory usage while maintaining accuracy.
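
For a single example with one correct class, cross-entropy reduces to the negative log of the probability assigned to that class. The sketch below assumes the probabilities have already been produced by a softmax; the `eps` floor is an illustrative guard against taking the log of zero.

```python
import math

def cross_entropy(probs, target_index, eps=1e-12):
    """Negative log-probability of the correct class for one example."""
    return -math.log(max(probs[target_index], eps))

probs = [0.7, 0.2, 0.1]
print(cross_entropy(probs, 0))  # about 0.357 (confident and correct)
print(cross_entropy(probs, 2))  # about 2.303 (correct class given only 10%)
```

The loss grows sharply as the probability of the true class shrinks, which is what pushes the network toward confident correct predictions.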

Backpropagation in CNNs

Backpropagation plays a crucial role in optimizing convolutional systems. It calculates gradients for each layer, enabling weight adjustments. Yann LeCun’s 1989 implementation laid the foundation for this process, making it a cornerstone of modern training.

Batch normalization stabilizes learning by normalizing layer inputs. This technique reduces internal covariate shift, improving performance. IBM highlights its importance in preventing vanishing gradients, ensuring stable updates across layers.
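
The training-time forward pass of batch normalization for one feature can be sketched as follows. The running statistics used at inference time are omitted, and `gamma` and `beta` stand for the learnable scale and shift, fixed here at their usual starting values.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

batch = [1.0, 2.0, 3.0, 4.0]
normalized = batch_norm(batch)
print(normalized)  # roughly [-1.34, -0.45, 0.45, 1.34]
```

Whatever scale the incoming activations have, the next layer sees inputs with approximately zero mean and unit variance.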

Gradient Descent and Optimization

Gradient descent drives the optimization process, minimizing loss functions. Stochastic gradient descent (SGD) updates weights after each mini-batch, while adaptive methods like Adam combine momentum with per-parameter learning rate adjustments. These strategies enhance model convergence.
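
The two update rules can be compared on a single scalar weight. This sketch uses the toy objective f(w) = (w - 3)^2, chosen only for illustration, together with commonly quoted default values for the momentum and Adam coefficients; the learning rate of 0.1 is an arbitrary choice.

```python
import math

def sgd_momentum_step(w, grad, velocity, lr=0.1, momentum=0.9):
    """One SGD-with-momentum update; returns the new weight and velocity."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity

def adam_step(w, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update at step t (t starts at 1); returns new w, m, v."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v

def grad_fn(w):
    """Gradient of the toy objective f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

w0 = 0.0
w_sgd, vel = sgd_momentum_step(w0, grad_fn(w0), velocity=0.0)
w_adam, m, v = adam_step(w0, grad_fn(w0), m=0.0, v=0.0, t=1)
print(w_sgd)   # about 0.6: the step is proportional to the gradient
print(w_adam)  # about 0.1: the first Adam step has size close to lr
```

The first step shows the key difference: plain SGD moves in proportion to the gradient magnitude, whereas Adam rescales the step so its size depends mainly on the learning rate.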

Learning rate scheduling further refines training. Techniques like step decay and cosine annealing adjust rates dynamically, balancing speed and accuracy. Weight decay regularization prevents overfitting, ensuring robust performance on unseen data.
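
Both schedules mentioned above reduce to short formulas. In this sketch the drop factor, the drop interval, and the total epoch count are illustrative values rather than recommendations.

```python
import math

def step_decay(base_lr, epoch, drop=0.1, epochs_per_drop=30):
    """Multiply the learning rate by `drop` every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)

def cosine_annealing(base_lr, epoch, total_epochs, min_lr=0.0):
    """Smoothly decay from base_lr to min_lr along half a cosine wave."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + cosine)

for epoch in (0, 30, 60):
    print(epoch, step_decay(0.1, epoch))            # about 0.1, 0.01, 0.001
for epoch in (0, 50, 100):
    print(epoch, cosine_annealing(0.1, epoch, 100))  # about 0.1, 0.05, 0.0
```

Step decay changes the rate abruptly at fixed intervals, while cosine annealing lowers it gradually over the whole run.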

Data augmentation expands training datasets by applying transformations like rotation and scaling. This approach improves generalization, enabling models to handle diverse inputs effectively. NVIDIA’s GPU advancements accelerate these processes, making large-scale training feasible.
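
Two of these transformations can be sketched on a tiny single-channel image. The 90-degree rotation and the nearest-neighbor upscaling are simplified stand-ins for the arbitrary-angle rotation and interpolated scaling that augmentation libraries typically provide.

```python
def rotate90(image):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]

def upscale(image, factor=2):
    """Nearest-neighbor upscaling: repeat every pixel `factor` times per axis."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]

image = [
    [1, 2],
    [3, 4],
]
print(rotate90(image))  # [[3, 1], [4, 2]]
print(upscale(image))   # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

Each transformed copy keeps the original label, so one labeled image yields several training examples.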

Applications of CNNs

From healthcare to entertainment, these models drive innovation across industries. Their ability to process visual data efficiently makes them indispensable for various tasks. Whether it’s identifying faces in photos or diagnosing diseases, the value of these systems is undeniable.

Image and Video Recognition

Facial recognition systems like Facebook’s DeepFace reported 97.35% accuracy on the Labeled Faces in the Wild benchmark. These pipelines analyze features like eyes and noses to identify individuals. Hybrid architectures, such as CNN-LSTM models, extend these capabilities to video analysis. They track movements and detect anomalies in real time.

Autonomous vehicles rely on these systems for object detection. By processing live feeds, they navigate roads safely. IBM’s computer vision applications further enhance these operations, enabling smarter decision-making.

Natural Language Processing

Convolutional systems also excel in text analysis. They classify documents, detect sentiment, and even generate summaries. Financial institutions use these models for time series forecasting, predicting market trends with precision.

Social media platforms leverage these systems for content moderation. They filter harmful posts and ensure compliance with community guidelines. Satellite imagery analysis also benefits from these models, enabling accurate land-use mapping.

Medical Image Analysis

NVIDIA Clara revolutionizes radiology with advanced imaging tools. U-Net architectures segment tumors and organs, aiding in precise diagnoses. COVID-19 detection in CT scans showcases the life-saving potential of these systems.

These models reduce manual effort, improving efficiency in healthcare. Their ability to handle complex operations ensures accurate results, enhancing patient care.

| Application | Key Features | Industry Impact |
|---|---|---|
| Facial Recognition | High accuracy, real-time processing | Security, social media |
| Video Analysis | Motion tracking, anomaly detection | Autonomous vehicles, surveillance |
| Medical Imaging | Tumor segmentation, disease detection | Healthcare, diagnostics |

Advantages of CNNs

Modern visual recognition systems benefit significantly from the efficiency of convolutional architectures. These models excel in handling complex visual data, offering a streamlined approach to feature extraction and classification. Their ability to automate tasks traditionally requiring manual intervention sets them apart.

Efficiency in Image Processing

State-of-the-art convolutional systems achieve over 90% top-5 accuracy on ImageNet. This performance stems from their ability to learn features automatically, eliminating the need for manual engineering. Fukushima’s original goals of reducing computational complexity are now realized through advancements like weight sharing and GPU acceleration.

Real-time processing capabilities further enhance their utility. Applications like facial recognition and autonomous driving rely on this efficiency for instant decision-making. NVIDIA’s GPU optimizations ensure these systems handle large datasets without compromising speed.

Reduction in Pre-processing Requirements

Traditional methods often require extensive pre-processing to extract meaningful features. Convolutional architectures, however, minimize this need. IBM highlights their ability to process raw data directly, reducing both time and resource consumption.

Mobile optimizations, such as SqueezeNet, demonstrate how these systems adapt to limited hardware. By reducing parameter counts, they maintain high performance while lowering computational demands. This approach makes them ideal for devices with restricted processing power.

| Aspect | Manual Feature Engineering | Learned Feature Engineering |
|---|---|---|
| Time | High | Low |
| Accuracy | Variable | Consistent |
| Resource Use | High | Low |

Translation invariance is another key benefit. Convolutional systems recognize patterns regardless of their position in the input, reducing the need for alignment. This efficiency ensures robust performance across diverse applications.
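
A one-dimensional sketch makes the idea concrete: sliding a filter over a signal yields a response that moves with the pattern, and taking the global maximum of that response discards the position. The signal, the filter, and the use of 1D data instead of images are all simplifications chosen for brevity.

```python
def correlate1d(signal, kernel):
    """Slide `kernel` over `signal` and return the response at each position."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

kernel = [1, -1, 1]                       # hypothetical pattern detector
early = [0, 1, -1, 1, 0, 0, 0, 0]         # pattern near the start
late = [0, 0, 0, 0, 1, -1, 1, 0]          # same pattern, shifted right by 3

response_early = correlate1d(early, kernel)
response_late = correlate1d(late, kernel)
print(response_early)  # [-2, 3, -2, 1, 0, 0]
print(response_late)   # [0, 0, 1, -2, 3, -2]
print(max(response_early), max(response_late))  # 3 3
```

The peak moves from index 1 to index 4 along with the pattern, but its height is unchanged, so a global max pooling step reports the same value for both inputs.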

Post-2012, accuracy improvements have solidified their role in advanced visual tasks. By leveraging these advantages, convolutional architectures continue to push the boundaries of modern machine learning.

Challenges in CNNs

Despite their advancements, convolutional architectures face significant hurdles in practical applications. These challenges range from computational demands to ensuring robust performance. Addressing these issues is crucial for scaling these systems effectively.

Computational Complexity

Modern architectures like ResNet-152, with 60 million parameters, demand substantial resources. GPU memory constraints often limit the complexity of these systems. Training time versus accuracy tradeoffs further complicate development.

Deep models exhibit a hunger for data, requiring extensive datasets for optimal performance. IBM highlights the need for efficient resource allocation to manage these demands. NVIDIA’s distributed training solutions offer some relief, but challenges persist.

- High memory usage limits scalability.

- Extended training times can hinder progress.

- Quantization techniques reduce precision, impacting accuracy.

Overfitting and Regularization

Overfitting remains a critical issue, especially in complex models. Techniques like dropout, with rates of 0.5 in fully connected layers, help mitigate this. L1 and L2 regularization methods also play a vital role in maintaining balance.

IBM warns that insufficient regularization can lead to poor generalization. Adversarial attacks exploit vulnerabilities, compromising system reliability. Class imbalance further complicates operations, requiring specialized solutions.

- Dropout reduces overfitting by randomly deactivating neurons.

- L1 regularization promotes sparsity, simplifying the model.

- L2 regularization penalizes large weights, improving stability.
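
The three techniques in the list above can be sketched in a few lines. The dropout shown is the common "inverted" variant, which rescales surviving activations during training so nothing needs to change at inference time; the penalty strength `lam` and the example weights are illustrative.

```python
import random

def dropout(activations, rate=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l1_penalty(weights, lam):
    """L1 term added to the loss: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 term added to the loss: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

weights = [1.0, -2.0, 3.0]
print(l1_penalty(weights, 0.1))  # about 0.6
print(l2_penalty(weights, 0.1))  # about 1.4
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=random.Random(0)))
```

With a rate of 0.5, each surviving activation is doubled, so the expected sum of the layer's outputs matches what the network sees when dropout is switched off.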

Addressing these challenges ensures that convolutional architectures remain effective and scalable. Innovations in resource management and regularization techniques continue to push the boundaries of what these systems can achieve.

CNNs vs. Other Neural Networks

Understanding the differences between convolutional systems and other models reveals their unique strengths. Each architecture excels in specific tasks, making them suitable for distinct applications. By comparing their designs, we can identify the best approach for various challenges.

Comparison with Recurrent Neural Networks (RNNs)

Recurrent systems specialize in sequential data, such as time series or text. They process information temporally, making them ideal for language modeling or speech recognition. In contrast, convolutional models focus on spatial hierarchies, excelling in image and video analysis.

Resource demands also differ. RNNs process a sequence one step at a time, which limits parallelism and often makes training slower. NVIDIA’s frameworks optimize both architectures, but convolutional systems typically handle visual data more efficiently. Hybrid models, like CNN-LSTM, combine their strengths for complex tasks.

Comparison with Feedforward Neural Networks

Fully connected feedforward systems lack the spatial hierarchy of convolutional models. Although both pass data in a single direction, a fully connected network treats every pixel as an independent input and ignores local structure, limiting its ability to extract complex visual features. IBM recommends convolutional architecture for visual tasks, as it captures patterns more effectively.

Vanishing gradients pose a challenge in deeper feedforward systems. Techniques like ReLU activation, common in convolutional models, mitigate this issue. Accuracy differences are evident on datasets like MNIST, where convolutional systems typically outperform fully connected counterparts.

By leveraging the strengths of each approach, developers can choose the right model for their specific needs. This ensures optimal performance across diverse applications.

Evolution of CNN Architectures

From LeNet to ResNet, the progression of convolutional models has been remarkable. These systems have evolved to handle increasingly complex tasks, driven by innovations in architecture and hardware. Each milestone has contributed to their growing capabilities in visual recognition.

LeNet to AlexNet

LeNet-5, introduced in 1998, was the first widely deployed convolutional model. It featured seven layers and demonstrated the potential of these systems for handwritten digit recognition. It relied on tanh-style activations; ReLU came into wide use later, with AlexNet, where it enabled faster training.

AlexNet’s 2012 breakthrough on the ImageNet dataset was a turning point. With a top-5 error rate of 16.4% (15.3% for its final competition entry), it showcased the power of GPU acceleration. This large-scale success paved the way for deeper and more complex architectures.

Modern Architectures like ResNet and VGGNet

VGG16, with 138 million parameters, emphasized depth in architecture. Its uniform structure of 3×3 filters improved feature extraction. However, the computational demands highlighted the need for efficiency-focused designs.

ResNet introduced residual connections, addressing the vanishing gradient problem. These connections allowed for training extremely deep models, with ResNet-152 achieving state-of-the-art accuracy on ImageNet at the time of its release. IBM’s comparisons highlight the performance gains of these innovations.
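
The core of a residual connection is a single addition: the block outputs its input plus a learned correction. In the sketch below, `transform` is a hypothetical stand-in for the block's convolutional layers, and vectors are used in place of feature maps to keep the example short.

```python
def residual_block(x, transform):
    """Return transform(x) + x, the skip connection used in residual networks."""
    fx = transform(x)
    return [xi + fi for xi, fi in zip(x, fx)]

def zero_transform(x):
    """A block that has learned nothing yet: it outputs all zeros."""
    return [0.0 for _ in x]

def scale_transform(x):
    """An arbitrary stand-in for learned layers: doubles every value."""
    return [2.0 * xi for xi in x]

x = [1.0, 2.0]
print(residual_block(x, zero_transform))   # [1.0, 2.0]
print(residual_block(x, scale_transform))  # [3.0, 6.0]
```

Because the input is passed through unchanged when the learned part outputs zero, stacking more blocks cannot make the signal or its gradient disappear, which is what makes very deep stacks trainable.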

| Architecture | Key Features | Impact |
|---|---|---|
| LeNet-5 | 7 layers, convolution and subsampling | Pioneered practical convolutional systems |
| AlexNet | GPU acceleration, ReLU activation, 16.4% top-5 error | Revolutionized large-scale recognition |
| VGG16 | 138 million parameters, 3×3 filters | Emphasized depth in architecture |
| ResNet | Residual connections, 152 layers | Enabled training of very deep models |

NVIDIA’s hardware co-design has been instrumental in advancing these systems. Efficiency-focused designs like MobileNet further optimize resource use, ensuring scalability. The evolution of convolutional architectures continues to push the boundaries of visual recognition.

Role of CNNs in Deep Learning

Convolutional models have become a cornerstone in the field of visual data processing. These systems are a vital subset of deep learning, offering unparalleled efficiency in extracting hierarchical features. Their ability to automate complex tasks has reshaped industries, from healthcare to autonomous driving.

By democratizing feature engineering, these models reduce the need for manual intervention. This shift has accelerated adoption rates, with 80% of computer vision systems now relying on convolutional architectures. NVIDIA’s advancements in GPU technology have further enhanced their computational efficiency, making them indispensable in modern machine learning.

CNNs as a Subset of Deep Learning

Convolutional systems are classified under the umbrella of deep learning due to their layered structure. Each layer extracts increasingly abstract features, enabling precise pattern recognition. IBM’s Watson visual recognition platform exemplifies their impact, offering scalable solutions for diverse applications.

Transfer learning paradigms have expanded their utility. Pre-trained models like ResNet allow developers to fine-tune systems for specific tasks, reducing training time and resource consumption. This approach has democratized access to advanced machine learning tools, empowering smaller organizations to innovate.

Impact on AI and Machine Learning

The integration of convolutional models has driven significant advancements in machine learning. Their ability to process vast datasets efficiently has enabled breakthroughs in areas like medical imaging and facial recognition. However, ethical concerns, particularly around privacy, remain a critical consideration.

Research output has surged, with thousands of papers published annually on convolutional architectures. This growth reflects their importance in pushing the boundaries of deep learning. The market is projected to reach $49.6 billion by 2026, underscoring their widespread adoption.

| Aspect | CNNs | Other Deep Learning Models |
|---|---|---|
| Feature Extraction | Automatic, hierarchical, spatial | Automatic, suited to sequences or other structure |
| Applications | Image, video, medical imaging | Text, time series, speech |
| Computational Needs | High (GPU-dependent) | Variable |

As convolutional systems continue to evolve, their integration into everyday technologies will deepen. From smart cities to personalized healthcare, their impact on machine learning and deep learning will remain transformative.

Future of CNNs

Emerging technologies are redefining the capabilities of convolutional architectures. These systems are evolving to handle more complex tasks, driven by innovations in hardware and algorithms. The focus is on improving efficiency, scalability, and adaptability across diverse applications.

Emerging Trends in CNN Research

Neuromorphic computing is paving the way for brain-inspired spiking models. These systems mimic biological neurons, offering energy-efficient operations. Quantum prototypes are also being explored, with proponents hoping for large speedups in processing visual data.

Edge computing optimizations are enabling real-time analysis on devices with limited resources. Automated architecture search (AutoML) is streamlining the design process, reducing manual intervention. NVIDIA’s Run:ai orchestration platform enhances resource management, ensuring optimal performance.

Potential Applications in Various Industries

In healthcare, 3D convolution is revolutionizing medical imaging. These models provide detailed insights, improving diagnostic accuracy. Federated learning implementations ensure data privacy while enabling collaborative model training.

Pruning techniques are enhancing sustainability by reducing computational demands. Real-time video analysis is expanding into markets like surveillance and autonomous systems. AR/VR integration is unlocking new possibilities in entertainment and education.

These advancements highlight the value of convolutional architectures in addressing modern challenges. By adopting innovative approaches, industries can leverage these systems for transformative solutions.

Conclusion

The transformative power of convolutional architectures reshapes how machines interpret visual data. These systems, classified under deep learning, excel in extracting hierarchical features, making them indispensable in modern machine learning. Their layered structure, combining convolution and pooling, sets them apart from traditional models.

Historically, innovations like AlexNet and ResNet have driven advancements in neural networks, enabling breakthroughs in fields like healthcare and autonomous driving. However, challenges like computational demands and overfitting persist, requiring ongoing research and optimization.

Looking ahead, convolutional neural networks will continue to dominate vision tasks, supported by leaders like IBM and NVIDIA. Implementers should focus on ethical AI development and leverage pre-trained models for efficiency.

FAQ

What is a Convolutional Neural Network (CNN)?

A Convolutional Neural Network is a specialized architecture designed for processing structured grid data, such as images. It uses convolutional layers to extract features and pooling layers to reduce dimensionality.

How do CNNs differ from other neural networks?

CNNs excel in handling spatial data like images due to their convolutional layers, which automatically detect local patterns. Unlike fully connected networks, they share weights across positions, which cuts parameter counts and reduces the need for manual feature extraction.

What are the core components of a CNN?

The main components include convolutional layers for feature extraction, pooling layers for dimensionality reduction, and fully connected layers for classification tasks.

How do CNNs learn features from images?

CNNs use filters and kernels to scan input images, identifying edges, textures, and other patterns. These features are progressively refined through multiple layers.

What are some common applications of CNNs?

CNNs are widely used in image recognition, video analysis, natural language processing, and medical imaging for tasks like tumor detection.

What challenges are associated with CNNs?

Challenges include high computational complexity, the risk of overfitting, and the need for large datasets to train effectively.

How does backpropagation work in CNNs?

Backpropagation adjusts the weights of the network by calculating the gradient of the loss function. This process helps minimize errors during training.

What is the role of pooling layers in CNNs?

Pooling layers reduce the spatial size of feature maps, lowering computational load and helping to limit overfitting by summarizing regions of the input.

How have CNN architectures evolved over time?

From early models like LeNet to advanced architectures like ResNet and VGGNet, CNNs have grown deeper and more efficient, achieving state-of-the-art performance in visual recognition tasks.

What is the future of CNNs in AI and machine learning?

Emerging trends include lightweight models for edge devices, applications in autonomous systems, and integration with other AI techniques for enhanced performance.